How well can we fit BPM tilts in CESRv using Cbar12 and Cbar22 data? The procedure is as follows:

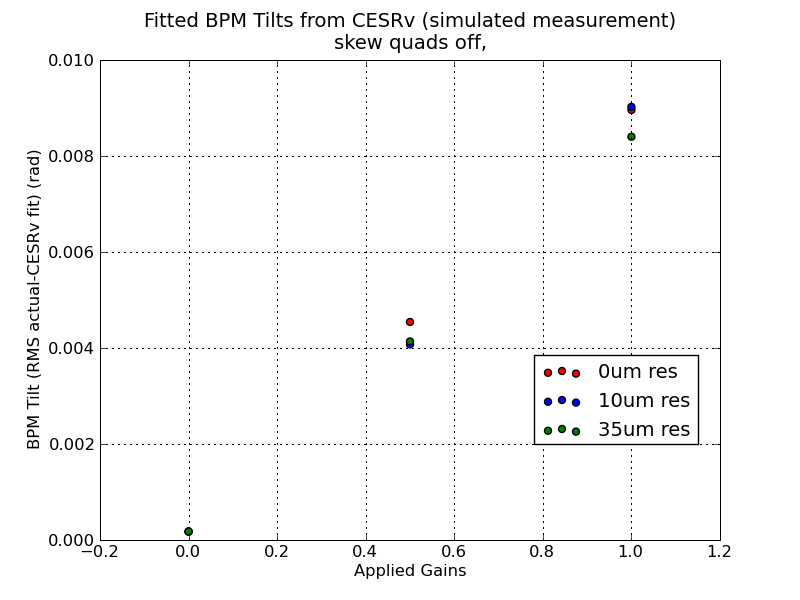

Plot these RMS tilt differences against the RMS applied gain for BPM resolutions of 0, 10, and 35 microns.

In this domain, resolution appears to play little or no role in how well we can fit the BPM tilts.

RMS(applied - CESRv fit) tilt = 16.9 mrad. This is well above (about 1.7 times) our theoretical tolerance of 10 mrad.
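The figure of merit above is the RMS over all BPMs of the applied tilt minus the CESRv-fitted tilt. A minimal sketch of that calculation and the comparison against the 10 mrad tolerance follows; the function name and the three-BPM tilt values are hypothetical, chosen only for illustration, and are not CESRv output.

```python
import math

TOLERANCE_MRAD = 10.0  # theoretical tolerance quoted in the text


def rms_tilt_difference(applied_mrad, fitted_mrad):
    """RMS of (applied - fitted) BPM tilt, in the same units as the inputs."""
    if len(applied_mrad) != len(fitted_mrad) or not applied_mrad:
        raise ValueError("need two equal-length, non-empty tilt lists")
    squares = [(a - f) ** 2 for a, f in zip(applied_mrad, fitted_mrad)]
    return math.sqrt(sum(squares) / len(squares))


# Hypothetical tilts for three BPMs, in mrad (illustrative only).
applied = [12.0, -5.0, 20.0]
fitted = [2.0, 5.0, 0.0]
rms = rms_tilt_difference(applied, fitted)
print(f"RMS tilt difference = {rms:.1f} mrad, within tolerance: {rms <= TOLERANCE_MRAD}")
```

Here the per-BPM differences are 10, -10, and 20 mrad, so the RMS is sqrt(200) ≈ 14.1 mrad, which fails the 10 mrad tolerance in the same way the 16.9 mrad result does.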

How large a residual Cbar12 can we tolerate and still fit the tilts to within 10 mrad?
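One way to answer this question from a scan is to record the RMS tilt error at several residual Cbar12 values and interpolate to where it crosses 10 mrad. The sketch below assumes the scan is sorted by Cbar12 and that the RMS tilt error grows with residual Cbar12; both are assumptions, not results shown here. The function name and the scan values in the example are hypothetical.

```python
def max_tolerable_cbar12(cbar12_values, rms_tilt_mrad, tolerance_mrad=10.0):
    """Largest residual Cbar12 whose interpolated RMS tilt error stays within tolerance.

    Assumes cbar12_values is sorted ascending (hypothetical scan layout).
    Returns None if even the smallest scanned Cbar12 exceeds the tolerance,
    and the largest scanned Cbar12 if the tolerance is never crossed.
    """
    points = list(zip(cbar12_values, rms_tilt_mrad))
    if not points:
        raise ValueError("empty scan")
    if points[0][1] > tolerance_mrad:
        return None
    for (c0, r0), (c1, r1) in zip(points, points[1:]):
        if r1 > tolerance_mrad:
            # r0 <= tolerance < r1 here, so r1 > r0 and the division is safe.
            return c0 + (tolerance_mrad - r0) * (c1 - c0) / (r1 - r0)
    return points[-1][0]


# Hypothetical scan: residual Cbar12 vs. single-seed RMS tilt error (mrad).
scan_cbar12 = [0.0, 0.005, 0.01, 0.02]
scan_rms = [2.0, 6.0, 12.0, 20.0]
limit = max_tolerable_cbar12(scan_cbar12, scan_rms)
print(f"max residual Cbar12 ~ {limit:.4f}")
```

With these made-up numbers the crossing lies between 0.005 and 0.01, at about 0.0083. Linear interpolation is the simplest choice; with noisy single-seed points a fit to seed-averaged data would be more robust.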

Note that each data point in this plot is a single seed, NOT an average over ~50 seeds as usual. Since this is a "best-case" scenario, with no resolution or gain errors, we would expect the final coupling-correction requirement to be Cbar12 < 0.005.
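Because each point is a single seed, its scatter reflects seed-to-seed variation rather than a statistical error bar. A minimal sketch of the usual seed averaging, assuming the per-seed RMS tilt errors are available as a list, reports the mean and its standard error; averaging N seeds shrinks the standard error by a factor of sqrt(N), roughly 7 for ~50 seeds. The function name and per-seed values are hypothetical.

```python
import math
import statistics


def seed_average(rms_per_seed):
    """Mean and standard error of the mean of a per-seed quantity."""
    n = len(rms_per_seed)
    if n < 2:
        raise ValueError("need at least two seeds for a standard error")
    mean = statistics.mean(rms_per_seed)
    # Sample standard deviation divided by sqrt(n) gives the standard error.
    sem = statistics.stdev(rms_per_seed) / math.sqrt(n)
    return mean, sem


# Hypothetical RMS tilt errors (mrad) from five seeds at one setting.
per_seed = [8.0, 12.0, 10.0, 14.0, 6.0]
mean, sem = seed_average(per_seed)
print(f"RMS tilt error = {mean:.1f} +/- {sem:.1f} mrad over {len(per_seed)} seeds")
```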