D-Hadronic Meetings

Table of Contents

- 2010

- 2010-12-21

- 2010-12-14

- 2010-12-07

- 2010-11-30

- 2010-11-23

- 2010-11-16

- 2010-11-09

- 2010-11-02

- 2010-10-26

- 2010-10-19

- 2010-10-12

- 2010-10-05

- 2010-09-28

- 2010-09-21

- 2010-09-14

- 2010-09-07

- 2010-08-03

- 2010-07-27

- 2010-07-20

- 2010-07-13

- 2010-07-06

- 2010-06-29

- 2010-06-22

- 2010-06-15

- 2010-06-08

- 2010-06-01

- 2010-05-25

- 2010-05-18

- 2010-05-11

- 2010-05-04

- 2010-04-27

- 2010-04-20

- 2010-04-13

- 2010-04-06

- 2010-03-30

- 2010-03-23

- 2010-03-16

- 2010-03-09

- 2010-02-02

- 2010-01-26

- 2010-01-21

- 2010-01-14

- 2010-01-07

- 2009

2010

2010-12-21

-

Progress on Background Shape Systematics

-

Use the same lineshape function to fit the sidebands:

- Delta E sideband low: figure

- Delta E sideband high: figure

Note: for mode Kpi in the above fit, the upper limit of the ARGUS slope parameter ("xi") was changed from -0.1 to 10. The fit result is still very close to the limit, although there was no complaint in the log file.

Then use the ARGUS parameters to fit signal region:

- ARGUS para low: figure

- ARGUS para high: figure

Compare with last week:

- Delta E distribution for all modes: figure

-

Compare with previous uncertainties:

| Mode | 281/pb | 818/pb |
|---|---|---|
| Kpi | 0.4 | 0.59 |
| Kpipi0 | 1.0 | 1.05 |
| Kpipipi | 0.4 | 0.64 |
| Kpipi | 0.4 | 0.41 |
| Kpipipi0 | 1.5 | 3.32 |
| Kspi | 0.4 | 0.91 |
| Kspipi0 | 1.0 | 1.48 |
| Kspipipi | 1.0 | 1.26 |
| KKpi | 1.0 | 0.80 |

-

Try to fix Kpipipi0 mode:

Fix the signal yields to be 1; fitting status OK.

- Delta E sideband low, fix N1, N2: figure

- Delta E sideband high, fix N1, N2: figure

- ARGUS para low, fix N1, N2: figure

- ARGUS para high, fix N1, N2: figure

Compare the yields with the standard: still a 3.9% difference.


2010-12-14

- Progress on Background Shape Systematics

Todo: Re-evaluating the sidebands.

DeltaE vs. mBC plot, see the correlation

Try to use the lineshape to fit.

2010-12-07

-

Checked the trigger bit meaning:

TriggerL1Data.h: v1.8: kElTrack = 0x10000, v1.10: kElTrack = 0x054002.

This may be the reason for the old/new MC difference.

Todo: send email to Mats Selen.
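To see which bits each quoted `kElTrack` value selects, the two masks can be decoded directly. This is a minimal sketch; the helper name `set_bits` is ours and is not part of `TriggerL1Data.h`.

```python
def set_bits(mask):
    """Return the positions (0 = least significant) of the bits set in mask."""
    return [i for i in range(mask.bit_length()) if (mask >> i) & 1]

K_EL_TRACK_V1_8 = 0x10000     # value quoted from TriggerL1Data.h v1.8
K_EL_TRACK_V1_10 = 0x054002   # value quoted from TriggerL1Data.h v1.10

print(set_bits(K_EL_TRACK_V1_8))    # [16]
print(set_bits(K_EL_TRACK_V1_10))   # [1, 14, 16, 18]
```

The v1.8 value is a single-bit mask (bit 16), while the v1.10 value has four bits set, so code that compares against one value may behave differently with the other.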

-

Working on updating the background shape:

We estimate the uncertainty in ST yields due to the background shape by repeating the ST fits with alternative background shape parameters. These alternative parameters are determined from the MBC distributions of events in high and low Δ E sidebands. For each mode, we fit each sideband with an ARGUS function to determine shape parameters and then repeat the ST yield fits with the ARGUS parameters fixed to these values. The resulting shifts in the ST yields are used to set the value of the systematic for each mode.
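The procedure above can be sketched as follows. The ARGUS shape is written in the RooFit-style parametrisation; the function names, the rule of taking the larger of the two sideband shifts, and the yields are assumptions made for illustration only.

```python
import math

def argus(m, m0, xi, p=0.5):
    """Unnormalised ARGUS shape: m * (1 - (m/m0)^2)^p * exp(xi * (1 - (m/m0)^2)).
    Returns zero at and above the endpoint m0."""
    if m >= m0:
        return 0.0
    u = 1.0 - (m / m0) ** 2
    return m * u ** p * math.exp(xi * u)

def background_shape_systematic(n_nominal, n_low, n_high):
    """Relative ST-yield shift in percent. Taking the larger of the two
    sideband shifts is an assumption of this sketch."""
    shifts = [abs(n - n_nominal) / n_nominal for n in (n_low, n_high)]
    return 100.0 * max(shifts)

# Hypothetical yields: nominal fit, fit with low-sideband ARGUS parameters,
# fit with high-sideband ARGUS parameters.
print(round(background_shape_systematic(10000.0, 10040.0, 9970.0), 2))  # 0.4
```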

2010-11-30

-

CU Group Meeting Talk - December 2010 (PDF)

Comments:

- p1: on -> at

- p4: Check CLEO-c parameters with Steve Gray

- p9: Point out the ARGUS background and the ISR -> long tail effect in the plot

- p10: Point out the square-root scale, used for better signal/background display and the same error bars everywhere.

- p15: a. reproducing the 281/pb result is non-trivial; b. the 818/pb result is consistent with 281/pb, small errors.

- Overall: show plots of 818/pb analysis.

2010-11-23

-

Double DCSD interference

-

Detector simulation - π0 efficiency

-

Understand the two peaks in D0 → Kππ0 (figure):

About 70% of the decays proceed via D0 → K- ρ+, ρ+ → π+ π0. The typical momenta of the particles involved in this decay: p(D0) = 250 MeV, p(K-) = 700 MeV, p(ρ) = |p(D0) - p(K-)| = 450 MeV.

So, p(π) = 450 - 250 = 200 MeV, or 450 + 250 = 700 MeV.

Thus, we observe two peaks, around 0.2 GeV and 0.7 GeV.


-

Calculate the pi0 efficiency corrections

-

Trigger simulation

Previously we assigned 0.2% and 0.1% to the highlighted modes:

Used the two trigger bits:

- bit 16: electron+track line in l1trig

- bit 2: two track in l1trig2

Still get 100% for all modes.

Calculated the same efficiency for the old 281/pb signal MC:

| Mode | Trigger efficiency (%) |

|---|---|

| D0toKpi | 99.99 +/- 0.00 |

| D0toKpipi0 | 100 - 0.00 |

| D0toKpipipi | 99.50 +/- 0.03 |

| DptoKpipi | 99.48 +/- 0.03 |

| DptoKpipipi0 | 99.72 +/- 0.03 |

| DptoKspi | 99.73 +/- 0.02 |

| DptoKspipi0 | 99.40 +/- 0.04 |

| DptoKspipipi | 99.32 +/- 0.04 |

| DptoKKpi | 98.92 +/- 0.08 |

Not sure why the efficiencies are lower than before.

Todo:

- Think about the process of rho decay

- Put the equation in the pi0 efficiency study

- Check the trigger bit meaning in the old and new signal MC

2010-11-16

- Progress on the systematics

-

Trigger simulation

Previously we assigned 0.2% and 0.1% to the highlighted modes:

Now, redo the simulation for 818/pb signal MC:

100% efficiency for all modes:

No need to assign uncertainty.

Todo: need to use two tracks bit.

- bit 16: electron+track line in l1trig

- bit 2: two track in l1trig2

-

Delta E requirement

Previously, we assigned 1.0% for the diagonal double tags, and \(\sqrt{2}\cdot 0.5\%\) for all other double tags.

Now, create the Δ E cuts table for double tags:

How to deal with this error?

Todo: think about background subtraction.

2010-11-09

-

Evaluate the overall systematics

-

Signal shape (DT and ST)

We vary the parameter values of the signal line shape.

- Width of ψ(3770): +/- 2.5 MeV

- Mass of ψ(3770): +/- 0.5 MeV/c2

- Blatt-Weisskopf radius: +/- 4 GeV⁻¹

Combine the changes in the yields in quadrature to obtain the ST signal shape uncertainty:

| Mode | 281/pb (%) | 818/pb (%) | Δ |
|---|---|---|---|
| 0 | 0.3 | 0.4 | +0.1 |
| 1 | 0.5 | 0.5 | 0 |
| 3 | 0.7 | 0.5 | -0.2 |
| 200 | 0.3 | 0.3 | 0 |
| 201 | 1.3 | 0.5 | -0.8 |
| 202 | 0.4 | 0.4 | 0 |
| 203 | 0.5 | 0.5 | 0 |
| 204 | 0.6 | 0.5 | -0.1 |
| 205 | 0.6 | 0.5 | -0.1 |

DT signal shape: 281/pb: 0.2, 818/pb: 0.2 (estimate)
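The quadrature combination of the per-parameter yield shifts can be sketched as below. The three input shifts are hypothetical placeholders, not values from the fits.

```python
import math

def combine_in_quadrature(shifts_percent):
    """Quadrature sum of independent relative yield shifts (in percent)."""
    return math.sqrt(sum(s * s for s in shifts_percent))

# Hypothetical shifts for one mode from varying the width, mass and
# Blatt-Weisskopf radius of the psi(3770) line shape, in percent.
shifts = [0.3, 0.4, 0.0]
print(round(combine_in_quadrature(shifts), 3))   # 0.5
```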

-

Double DCSD interference

In the neutral DT modes, the CFD amplitudes can interfere with amplitudes where both D0 and D0bar undergo DCSD. This interference is controlled by the wrong-sign DCSD/CFD rate ratios (RWS) and relative phases (δ). If we assume common values of RWS and δ for the three D0 modes, then the relative size of the interference effect is Δ = 2 RWS cos(2δ). With RWS = 0.004, the yield uncertainty is 0.8% (2 x 0.004 = 0.008).

Need to check the latest value of the RWS.

Todo: Talk to Werner and Jim with correction.
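A quick numerical check of the bound used above, assuming the expression Δ = 2 RWS cos(2δ) from the notes; the function name is ours.

```python
import math

def dcsd_interference(r_ws, delta):
    """Relative size of the double-DCSD interference, 2 * R_WS * cos(2 * delta)."""
    return 2.0 * r_ws * math.cos(2.0 * delta)

R_WS = 0.004   # value quoted in the notes; the latest value still needs checking

# |cos(2 * delta)| <= 1, so the effect is bounded by 2 * R_WS for any phase.
bound = abs(dcsd_interference(R_WS, 0.0))
print(f"{100 * bound:.1f}%")   # 0.8%
```

Since the phase is not known here, the maximum over δ is what is quoted as the uncertainty.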

-

Detector simulation - Tracking and KS efficiencies

A tracking efficiency systematic uncertainty e(charged) of 0.3% is applied to each K candidate and each π candidate.

An additional 0.6% tracking systematic uncertainty is applied to each K± track.

A KS0 reconstruction efficiency systematic uncertainty of 1.8% is applied to KS0 candidates.

From CBX 2008-040 (Determination of Tracking Efficiency Systematics with Full 818 pb−1 Dataset): "We suggest, for pions, a systematic of 0.3% per pion track. For kaons, if the momentum spectrum averages to about 500 MeV, we suggest a systematic of 0.6% per kaon."

From CBX 2008-041 (Determination of KS0 Efficiency Systematic with Full 818 pb−1 Dataset): "We suggest using a 0.8% systematic uncertainty for each KS0, in addition to track-finding systematics for the two pion tracks."

-

Detector simulation - π0 efficiency

We find a small bias and correct for it by multiplying the efficiencies determined in Monte Carlo simulations by 0.961^n, where n is the number of reconstructed π0s in each final state.

We assign a correlated systematic uncertainty of 2.0% to each π0.

From CBX 2008-029 (π0 Finding Efficiencies): "We have studied π0 finding efficiencies in data and Monte Carlo and recommend that CLEO-c analyses which use the standard π0 cuts apply a εdata/εMC = 0.94 correction to their efficiencies. While this correction is stable with π0 momentum, the uncertainty on the correction varies from 2% at low momentum to 0.7% at 1 GeV. For modes considered by the Dhadronic analysis, the uncertainty on the correction is 1.3%, but the uncertainty that should be applied to a given analysis may be larger or smaller than this number, depending on the π0 momentum spectrum of that analysis."

Todo: Check the π0 momentum in D modes.
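The π0 correction described above amounts to a per-π0 factor raised to the number of π0s. A minimal sketch, using the 0.961 and 2.0% values from the earlier analysis; adding the correlated uncertainty linearly per π0 is an assumption of the sketch, and the function names are ours.

```python
def pi0_efficiency_correction(n_pi0, per_pi0=0.961):
    """Data/MC efficiency correction for a final state with n_pi0 reconstructed pi0s."""
    return per_pi0 ** n_pi0

def pi0_systematic_percent(n_pi0, per_pi0_percent=2.0):
    """Correlated uncertainty, added linearly per pi0 (assumption of this sketch)."""
    return per_pi0_percent * n_pi0

for mode, n in [("D0 -> K- pi+", 0), ("D0 -> K- pi+ pi0", 1)]:
    print(mode, round(pi0_efficiency_correction(n), 3), pi0_systematic_percent(n))
```

Switching to the CBX 2008-029 recommendation would only change the `per_pi0` and `per_pi0_percent` arguments.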

-

Detector simulation - Particle identification efficiencies

Particle identification efficiencies are studied by reconstructing decays with unambiguous particle content, such as D0 → KS0 π+ π- and φ → K+ K-. We also use D0 → K- π+ π0, where the K- and π+ are distinguished kinematically. The efficiencies in data are well modeled by the Monte Carlo simulation, with small biases.

We correct for these biases by multiplying the efficiencies determined in Monte Carlo simulations by 0.995^l × 0.990^m, where l and m are the numbers of PID-identified π±s and PID-identified K±s, respectively, in each final state.

We assign correlated uncertainties of 0.25% and 0.3% to each π± and K±, respectively.
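As a worked example of the PID correction, the factor for a given final state follows from the track counts. This sketch assumes every charged pion and kaon in the final state is PID-identified; the function name is ours.

```python
def pid_efficiency_correction(n_pions, n_kaons, per_pion=0.995, per_kaon=0.990):
    """Data/MC PID correction for l PID-identified pions and m PID-identified kaons."""
    return per_pion ** n_pions * per_kaon ** n_kaons

# D0 -> K- pi+: one pion and one kaon.
print(round(pid_efficiency_correction(1, 1), 5))   # 0.98505
```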

-

Lepton veto

In events with only two tracks we required D0 → K- π+ ST candidates to pass additional requirements to eliminate e+ e- → e+ e- γ γ, e+ e- → μ+ μ- γ γ, and cosmic ray muon events. These requirements eliminate approximately 0.1% of the real D0 → K- π+ candidates, and we include a systematic uncertainty of 0.1% on the D0 → K- π+ ST yields to account for the effect of these additional requirements.

-

Trigger simulation.

Most modes are efficiently triggered by a two-track trigger. However, in the modes D0 → K- π+ π0 and D+ → KS0 π+, Monte Carlo simulation predicts a small inefficiency (0.1% – 0.2%) because the track momenta may be too low to satisfy the trigger or because the KS0 daughter tracks may be too far displaced from the interaction region. For these two modes, we assign a relative uncertainty in the detection efficiency of the size of the trigger inefficiency predicted by the simulation.

Todo: Check the ntuple, Low Track for mode with π0.

-

|Δ E| requirement.

Discrepancies in detector resolution between data and Monte Carlo simulations can produce differences in the efficiencies of the Δ E requirement between data and Monte Carlo events.

No evidence for such discrepancies has been found, and we include systematic uncertainties of 1.0% for D+ → KS0 π+ π0 and D+ → K+ K- π+ decays, and 0.5% for all other modes.

-

Background shape

We estimate the uncertainty in ST yields due to the background shape by repeating the ST fits with alternative background shape parameters. These alternative parameters are determined from the MBC distributions of events in high and low Δ E sidebands. For each mode, we fit each sideband with an ARGUS function to determine shape parameters and then repeat the ST yield fits with the ARGUS parameters fixed to these values. The resulting shifts in the ST yields are used to set the value of the systematic for each mode.

Todo: Repeat.

-

Final-state radiation.

In Monte Carlo simulations, the reduction of DT efficiencies due to FSR is approximately a factor of 2 larger than the reduction of ST efficiencies due to FSR. This leads to branching fraction values larger by 0.5% to 3% than they would be without including FSR in the Monte Carlo simulations. We assign conservative uncertainties of ± 30% of the FSR correction to the efficiency as the uncertainty in each mode. This uncertainty is correlated across all modes.

Todo: Check the Heavy Flavor Average Group: Lorrence's approach for FSR.
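The 30% rule translates into per-mode uncertainties as sketched below. Using the 0.5% and 3% ends of the quoted branching fraction shift as stand-ins for the size of the FSR correction is an assumption of this example.

```python
def fsr_systematic(correction_percent, fraction=0.30):
    """Uncertainty assigned as a fixed fraction of the FSR correction (in percent)."""
    return fraction * correction_percent

for correction in (0.5, 3.0):
    print(correction, "->", round(fsr_systematic(correction), 2))
```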

-

Resonant substructure

The observed resonant substructures of three- and four-body decay modes in our simulations are found not to provide a perfect description of the data. Such disagreements can lead to wrong estimates of the efficiency in the simulation. We estimate systematic uncertainties for the three- and four-body modes from the observed discrepancies. The uncertainties in efficiency are not correlated between modes, but the correlations in systematic uncertainties for the efficiency of mode i are taken into account in εi, εij, and εii.

Todo: Will repeat this process.

-

Multiple candidates

In our event selection, we chose a single candidate per event per mode. So, in general, because the correct candidate was not always chosen, our signal efficiencies depend on the rate at which events with multiple candidates occur. Using signal Monte Carlo samples, we estimate the probability of choosing the wrong candidate, P, when there are multiple candidates present.

We also study the accuracy with which the Monte Carlo simulations model the multiple candidate rate, R, in data. If P is nonzero and if R differs between data and Monte Carlo events, then the signal efficiencies measured in Monte Carlo simulations are systematically biased; if only one of these conditions is true, then there is no efficiency bias.

Based on the measured values of P(Rdata/RMC - 1), we assign the systematic uncertainties to ST efficiencies. For each decay mode the multiple candidate systematic is correlated between the D and \bar{D} decay for single tags.

- Todo: Repeat and may ask Peter.
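The quantity P(Rdata/RMC - 1) used above can be evaluated per mode as in this sketch; the input numbers are hypothetical and the function name is ours.

```python
def multiple_candidate_bias(p_wrong, r_data, r_mc):
    """P * (R_data / R_MC - 1): fractional efficiency bias from multiple candidates.

    p_wrong : probability of choosing the wrong candidate when several are present
    r_data  : multiple-candidate rate in data
    r_mc    : multiple-candidate rate in Monte Carlo
    """
    return p_wrong * (r_data / r_mc - 1.0)

# Hypothetical inputs, for illustration only.
print(multiple_candidate_bias(0.2, 0.11, 0.10))

# The bias vanishes if either condition fails: P = 0, or R_data = R_MC.
print(multiple_candidate_bias(0.0, 0.11, 0.10), multiple_candidate_bias(0.2, 0.10, 0.10))
```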

-

Luminosity.

For the e+ e- → D \bar{D} peak cross section measurements, we include additional uncertainties from the luminosity measurement (1.0%).

Not going to change.


2010-11-02

-

CLEO PTA Talk for November 2010 (PDF)

Comments:

p3: Add sub items for the details. Since the May CLEO meeting in the subtitle. Put two or three plots such as the reference modes, Ks 3 pi.

External backgrounds: some words to explain.

Peaking backgrounds plots, the internal modes are very small.

p4: Change the label(281ipbv0, v7) , mention the different parameters. font size.

p5: font size.

p6: review of the syst, instead of specific items.

-

Question about the Δ E cuts systematics:

- Which category of the systematic errors shall we improve? Make a final list and evaluate which ones are worth improving. Go back to the previous procedure and evaluate.

2010-10-26

-

Update on the CBX

- Updated the PDG 2010 for the external background study

- Added double and single tags figures (not for the generic single tags, still running)

- Systematics study: done with the variations for the lineshapes, noFSR, and wide Delta E

-

Plan to give an update at the CLEO meeting on Nov. 5th.

- Update on the external backgrounds absolute value?

2010-10-19

-

Update on the CBX

- Added section "What's new"

- Updated the figures for bkg (generic, cont, tau, radret)

- Updated the BF fit results table, including the luminosity for 818/pb

- Todo:

- Update the bkg efficiency table

- Update the generic MC fitting

2010-10-12

-

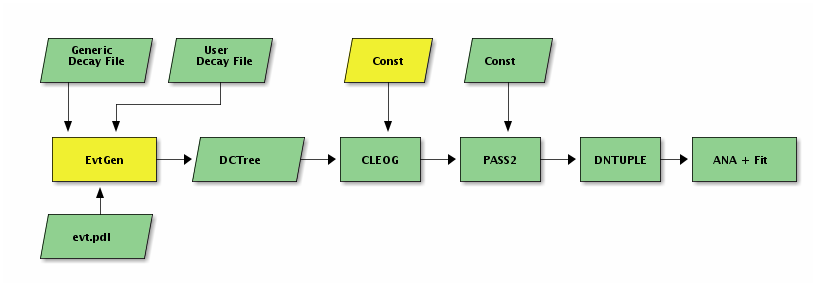

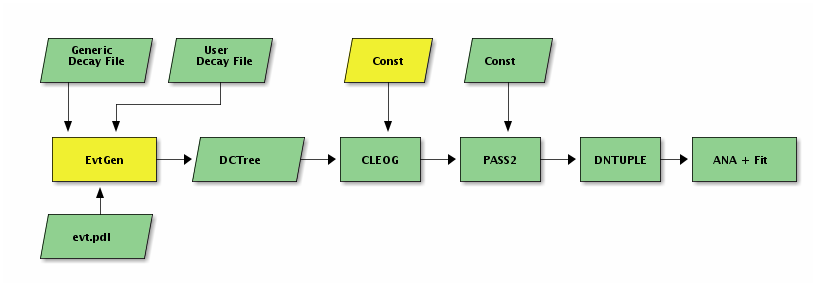

Test the SL5

After setting the same random seeds for both CLEOG and Pass2, I got the same number of D candidates.

Generate exactly the same MC by setting the same random seeds.

| Mode Dp to Kspipipi | SL4 | SL5 |
|---|---|---|
| Numbers generated (CLEOG, Pass2, DSkim) | 3931 | 3931 |
| Numbers of D candidates | 1475 | 1475 |

-

Draft of CBX available at: Page.

- V1.23: Update the branching fraction fit results tables

- V1.21: Update the paragraphs and most of the tables up to Section "Backgrounds".

-

Question:

Found "nan" value in the branching fit:

sigma(D0D0bar) = 10637.9 +- 28.7876 (stat) +- 164.145 (syst)

sigma(D+D-) = 8390.41 +- 29.5951 (stat) +- 127.355 (syst)

Correlation coeff between sigma(D0D0bar) and sigma(D+D-): 0.721828

sigma(DDbar) = 19028.3 +- 41.2868 (stat) +- 273.165 (syst)

chg/neu = 0.788725 +- 0.0103848 (stat) +- nan (syst)

The raw output can be found at: here.

-

Todo:

- Finish the Backgrounds section

- Finish the Generic MC fitting

-

Update 818 lumi

grep 281 data* -r

data_crosssectionsdef:281.53

data_statonly_crosssectionsdef:281.53

data_yields_for_werner:281.6195574352

- Fix "nan" error

2010-10-05

- Backgrounds study

- Add no crossfeeds cuts figure

- Backgrounds components:

-

Fit the background for the 537/pb generic MC (20xlumi)

With crossfeeds: figure

With no crossfeed: figure

Just more entries observed, no additional new features found.

Will move on to 818/pb case.

-

Test the SL5

Generate exactly the same MC by setting the same random seeds.

| Mode Dp to Kspipipi | SL4 | SL5 |
|---|---|---|
| Numbers generated (CLEOG, Pass2, DSkim) | 3931 | 3931 |
| Numbers of D candidates | 1475 | 1471 |

Found a difference of 4 events!

Compare the unique events for SL4 and SL5 => table

There are 14 unique events in the SL4 case and 10 unique events in the SL5 case.

How deep shall we dig into this? Open to suggestions…
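The unique-event comparison is a pair of set differences. A minimal sketch, assuming each candidate is identified by a (run, event) pair; the identifiers below are hypothetical.

```python
def compare_event_lists(events_a, events_b):
    """Split two candidate lists into common and unique (run, event) identifiers."""
    a, b = set(events_a), set(events_b)
    return {"common": a & b, "only_a": a - b, "only_b": b - a}

# Hypothetical (run, event) identifiers, for illustration only.
sl4 = [(1, 10), (1, 11), (2, 5)]
sl5 = [(1, 10), (2, 5), (2, 6)]
result = compare_event_lists(sl4, sl5)
print(sorted(result["only_a"]), sorted(result["only_b"]))   # [(1, 11)] [(2, 6)]
```

With the numbers quoted above, 1475 - 14 and 1471 - 10 both give 1461 common candidates, so the two counts of unique events are consistent with each other.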

- Todo:

- Add separate values for χ2 and Ndof

- Set random seeds in Pass2.

- Release the first draft of CBX.

2010-09-28

-

Backgrounds study

-

Use fixed width from 281/pb data fitting for the gaussian

Add χ2 / N d.o.f in the plots (extracted from frame): figure

For comparison, also fitted with float width: figure

We can see that having a fixed value for the gauss shape makes the fitting more reliable.

However, there is still one mode with χ2/ndof larger than 20.

Also, we noticed that some yields are reaching the 1000 limit, but we don't know the reason.

-

Fit without Gaussian signal: figure

This fit is done by fixing the parameter 'yld' to 1, namely only one yield. (I tried to set it to 0, but that failed; Jim suggested using 1, and it worked.)

If we compare this group of figures with the previous one (figure), we see a smaller χ2/ndof. Intuitively, the fit with fewer free parameters should not be better than the one with more free parameters.

This might have something to do with the fitting procedure in the previous one, where we saw that some modes hit the 1000 limit on yld.

- Above all, the peaking feature in mode Kpi is still there. What shall we do about this?


-

Todo:

- Add crossfeed cuts

- Check the yld limit (2000)

- Look up the MCTruth for klv

- Plot the Δ E

2010-09-21

-

Backgrounds study

Reproduced the generic backgrounds fitting: figure (from last week figure )

Parsed the backgrounds components:

- Ran interactive job on SL5

-

Todo:

- Fit without the gaussian

- Compare the χ2

- Start with the non-gaussian part and use those parameters as input to see if it works.

- Fix the width of the modes as the value from data

- Detailed test on the SL5 machine

2010-09-07

-

Backgrounds study

- Done: creating the ntuple for the 537/pb generic-mc-ddbar sample by splitting it into multiple jobs based on the runs

- Done: creating the ntuple for the 281/pb continuum, radiative return, and tau-pairs MC samples in the latest software environment.

-

In progress: fit the generic ddbar MC for 281/pb, reproduce figure 72 from our dhadcbx281.

Started from Peter's script:

/nfs/cor/user/ponyisi/hadD/summerconf/bkggeneric.py

Also found another file, bkggeneric-dfix.py; learning the details.

- Plan: reproduce all of the background plots for 281/pb from the CBX, then apply the same procedure to the 537/pb MC, and finally to the full 818/pb sample.

2010-08-03

-

Backgrounds study

-

Ran the 5xlumi continuum for 281/pb => table (info)

Normalized table: => table (info)

Read the previous CBX, need to do the mBC fit to check the peaking background on continuum, radiative return, and τ-pairs

-

Ran the Ntuple on the 537/pb ddbar generic sample.

Some ROOT files are unfinished: because of the large data sample, the job was terminated by the server after running for more than two days.

Need to break down the runs and submit to multiple jobs.
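Splitting the run list into per-job chunks could look like the sketch below. The run numbers and chunk size are hypothetical, and the actual job submission is not shown.

```python
def split_runs(runs, chunk_size):
    """Split a list of run numbers into consecutive chunks, one chunk per job."""
    return [runs[i:i + chunk_size] for i in range(0, len(runs), chunk_size)]

runs = list(range(1000, 1010))   # hypothetical run numbers
jobs = split_runs(runs, 4)
print([len(job) for job in jobs])   # [4, 4, 2]
```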


2010-07-27

-

Backgrounds study

- Backgrounds for the generic MC (10xlumi for original 281/pb data) => table. Listed the first 20 modes contributing to the backgrounds.

- The DCSD decays have been taken into consideration for neutral D's.

- Todo:

2010-07-20

-

Backgrounds study

- Made ntuple for the original generic MC (dtag v1 10xlumi, 281/pb data, size 24G)

- Made ntuple for the new generic MC (dtag v2, 20xlumi, 818/pb data, size 140G)

- Analyze the faking signal: table

-

Todo:

- Include the charge conjugate mode for neutral D

- List the modes name for >1000

- Look at the continuum MC

2010-07-13

-

Found the difference for the data yields in 281/pb (table)

- Some events were not available in eventstore in 20060208 for dtag but are accessible in 20070822. I tried the "goto" feature in suez, and it confirmed that no events were processed. This can explain the increase in the extracted yields in the table.

- I checked the mBC distribution of those events, and found they are just "normal" events. For example mode D0toKpi: figure

-

I used different line shape parameters for mBC fitting

| Parameter | 281ipbv0 | 281ipbv9 |
|---|---|---|
| Gamma | 0.0286 | 0.0252 |
| R | 12.3 | 12.7 |
| Mres | 3.7718 | 3.7724 |

Once I used the old parameters and fit the old data in the new environment, I got a consistent result: table

-

Now, fit new data in new env with old parameters: table

This time, it reflects the increase of the yields before the mBC fitting.

-

Question:

-

How are we going to deal with these differences in data?

Systematic error?

A: Not error, since we have taken care of the lost luminosity in the original measurement.

-

Should we use the same line shape parameters (Gamma, r, mass) as the new MC?

A: As long as we keep it consistent, the only thing that matters is the associated systematic errors. So, I'll just move on with the new line shape parameters we use for the new signal MC.


-

Todo:

-

Check the DTag page for the missed jobs. Here is the first posting on the HyperNews:

https://hypernews.lepp.cornell.edu/HyperNews/get/DTag/23.html


- Run the generic, make mBC fitting for the wrong MC in the MCTruth

2010-07-06

-

Getting event details for those listed in table

- Skimmed the data ntuple by using the "goto" feature in suez

- Tested the 90 events that are unique to the new data against the old data skim, expecting 0. However, all of them were selected! This means that the selection scripts applied after the ntuple stage are the same, but those events may not be stored in the old ntuple.

- Ran the test to check the 90 events for all of the old ntuple data, but found none of them!

- Now the question is why those events get cut off when not using the "goto" feature in suez?

-

Check the dataset distribution for the unique events => table

It looks like the unique events are mostly distributed in data36. Since we are using the same timestamp for the datasets in the eventstore, there is no obvious reason to explain this behavior. Any suggestions?

-

Todo

Create the table for the run with events list, and send the logfile link to the DHad HyperNews.

Logfiles link: 281ipbv0 , 281ipbv7

Data36: 281ipbv0: http://www.lepp.cornell.edu/~xs32/private/DHad/dat/data/2.1/dtuple-data36.txt

281ipbv7: http://www.lepp.cornell.edu/~xs32/private/DHad/dat/data/10.1.7/dtuple-data36.txt

2010-06-29

-

Getting event details for those listed in table

First try: read the data sample on the fly; very slow.

Second try (last week): use ROOT scripts (MakeClass) to skim the ntuple based on the events; still very slow.

Third try: use the suez goto feature to skim; very fast.

Now have the skimmed ntuple and am ready to explore the cuts.

2010-06-22

-

Getting event details for those listed in last week's table

Due to the large number of events in the data sample, it's very time consuming to do it on the fly. I'm working on skimming the ntuple based on those unique events and will then study the cuts.

Now the C++ script to read the event info and output it to the ntuple is done; it needs to be run on the data sample.

2010-06-08

-

Fit mBC with original parameters for data and signal MC

- Compare the yields between 281ipbv0 and 281ipbv8 for 281/pb data => table

- Compare the yields between 281ipbv0 and 281ipbv8 for 281/pb signal MC => table

- Compare the diffs from the above two tables => table

- Conclusion: the momentum resolution parameters are not the reason for the difference in yields.

- Todo: Check the selected events before the mBC fitting, event by event.

-

Check the double tag numbers:

There are three modes which have different generated numbers for double tags.

| FileName | Original | New |
|---|---|---|
| Double_D0_to_Kpipi0__D0B_to_Kpipi0 | 3674 | 2000 |
| Double_D0_to_Kpipi0__D0B_to_Kpipipi | 2023 | 2000 |
| Double_D0_to_Kpipipi__D0B_to_Kpipi0 | 2023 | 2000 |

These differences have been taken into account in the efficiency calculation.

-

Check the background scale up

There are two files used for the BF fitting:

data_statonly_external_bkg_bfs_for_werner

Notice that the second line after each name is all zeros, so I guessed that this line holds the systematic.

data_external_bkg_bfs_for_werner

Applied the factor on both cases.

2010-06-01

-

Done with restoring pi0 and Kshort cuts to DSkimv1

-

281/pb

- Compare the yields between 281ipbv0 and 281ipbv7 for 281/pb data => table

- Compare the yields between 281ipbv0 and 281ipbv7 for 281/pb signal MC => table

- Compare the diffs from the above two tables => table

- Compare the Branching Fractions => table

- The momentum resolution parameters for 281ipbv0 => table

- The momentum resolution parameters for 281ipbv7 => table


- 537/pb

-

818/pb

- Compare BF with 281ipbv7 => table

-

What should be done next?

-

Systematics:

- Single Tag Background modeling

- Double DCSD interference

- Detector simulation

- Lepton veto

- Trigger simulation

- Final state radiation

- |Δ E| requirement

- Signal line shape

- Resonant substructure

- Multiple candidates


- Anything else?

-

Todo

- [X] Add links of the parameters to today's meeting page

- [X] Fit mBC with original parameters for data and signal MC

- [X] Check the double tag generated numbers

- [X] Check that the background scale-up also applies to the error for 537/pb

- [X] Change the BFs table 10E6 to 1E6

- Change the NDDbar in BFs table: divide by 1.91 for 537/pb

2010-05-25

-

Progress on restoring pi0 and Kshort cuts to DSkimv1

- Re-ntupled the data and single tag MC by adding the variable "isType1Pi0" as suggested by Peter last week. It does the work very well.

- Updated the selection scripts using this new variable to match the Dskimv1 pi0

- Updated the scripts to use the 3-sigma mass window for Kshorts.

- Working on the double tags MC of the 281/pb, will give the yield efficiency as well as the branching fractions by next week.

2010-05-18

-

Progress on restoring pi0 cuts to DSkimv1

- Read the doc about Dskimv1 and v2

-

Confirmed that the difference is the E9/E25

The v1 used "E925Scheme" default value 1 (both showers must satisfy 1% E9/E25 cuts, uses Unfolded-E9/E25 if the 2 showers are closer than 25cm)

The v2 used 0, means no E9/E25 cuts

- Tried to implement this same cut on the ntuple, but after talking with Brian, he pointed out that the cut is non-trivial, as stated in the manual. So, I'm working on re-skimming the files now.

2010-05-11

-

Now: Working on using the DSkimv1 cuts on the Dskim v2 sample

pi0 and Kshort

- Next: find out the neutral D branching fractions discrepancies in 818/pb data

- Future: Study the backgrounds in the DSkimv2 between DT and MC.

2010-05-04

-

Fixed the problem of crossfeeds matrix

In the original script:

    a = float(st.split()[-2])
    b = float(st.split()[3])
    # signal MC yield/error on yield
    if a > 0 and abs(1-b) > 3 and\
       idstring not in attr.veto_fakes_string_list:

    veto_fakes_string_list = ['D0_to_Kpipi0 fakes Dp_to_Kpipi:',
                              'D0_to_Kpipi0 fakes Dp_to_Kspipi0:',
                              'D0B_to_Kpipipi fakes Dm_to_Kpipipi0:',
                              'Dp_to_Kpipi fakes D0B_to_Kpipipi:']

The criteria "a > 0 and abs(1-b) > 3" effectively select only Kpi for the matrix.

However, in the new MC, those criteria are no longer effective; they pick up more elements in the matrix.

The updated script uses an explicit list to make sure that only the Kpi crossfeeds are used in the matrix.

used_crossfeeds = ['D0_to_Kpi fakes D0B_to_Kpi:', 'D0B_to_Kpi fakes D0_to_Kpi:']
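How such an explicit list can be applied is sketched below. Only the two identifier strings come from the script; the function `select_crossfeeds`, the `(idstring, value)` layout, and the numeric values are hypothetical.

```python
USED_CROSSFEEDS = [
    "D0_to_Kpi fakes D0B_to_Kpi:",
    "D0B_to_Kpi fakes D0_to_Kpi:",
]

def select_crossfeeds(entries, used=USED_CROSSFEEDS):
    """Keep only the (idstring, value) pairs whose idstring is in the allowed list."""
    return [(idstring, value) for idstring, value in entries if idstring in used]

# Hypothetical entries; the numeric values are placeholders, not fit results.
entries = [
    ("D0_to_Kpi fakes D0B_to_Kpi:", 0.001),
    ("D0_to_Kpipi0 fakes Dp_to_Kpipi:", 0.002),
]
print(select_crossfeeds(entries))
```

Unlike the threshold on the yield and its error, an allow-list does not change its selection when the MC statistics change.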

To see that this is taking effect, compare the following tables.

- 281ipbv0: The original one (PRD 2007)

- 281ipbv5: Using the latest CLEO software release for data 281/pb

- 281ipbv6: Same as 281ipbv5, only consider two crossfeeds

| Label | 281ipbv0 |

|---|---|

| 281ipbv0 | |

| 281ipbv5 | table |

| 281ipbv6 | table |

-

The new results for 537/pb and 818/pb data using the right matrix

- 281ipbv0: The original one (PRD 2007)

- 537ipbv6: Using the latest CLEO software, only use Kpi crossfeeds, scale up bkgs for absolute

-

818ipbv6: New CLEO software v5, only use Kpi crossfeeds, scale up bkgs for absolute

| Label | 281ipbv0 |
|---|---|
| 281ipbv0 | |
| 537ipbv6 | table |
| 818ipbv6 | table |

Noticed a large difference in the 818/pb case for the D0s.

- Draft slides for the CLEO PTA talk (PDF).

2010-04-27

-

Crossfeeds fitting - difference with Peter's

- 7.06: The original one (PRD 2007)

- 281ipbv0: Using Peter's crossfeed result (yields_and_efficiencies file)

- 281ipbv0.1: Using Peter's crossfeed evt file as input, fit with ROOT4.03, RooFitv1.92

- 281ipbv0.2: Using Peter's crossfeed evt file as input, fit with ROOT5.26, RooFitv3.12

| Label | BFs compare with PDG2004 |
|---|---|
| 7.06 | table |
| 281ipbv0 | table |
| 281ipbv0.1 | table |
| 281ipbv0.2 | table |

Compare with 7.06:

https://www.lepp.cornell.edu/~xs32/private/DHad/t10.1#281ipbv0

-

Preliminary BF results for the full data sample

-

Processed the 281/pb and 537/pb samples with the old systematic errors

Figures:

| Type | 281ipbv5 | 537ipbv5 | 818ipbv5 |
|---|---|---|---|
| Resolution parameters | figure | figure | figure |
| Signal MC single tags | figure | figure | figure |
| Signal MC double tags | figure | figure | figure |
| Data single tags | figure | figure | figure |
| Data double tags | figure | figure | figure |

Tables:

| Label | BFs compare with PDG2004 |
|---|---|
| 7.06 | table |
| 281ipbv5 | table |
| 537ipbv5 | table |
| 818ipbv5 | table |


Questions:

- Why larger D2KsPi for 281/pb?

-

Why does 818/pb have a lower D2KPi BF?

| | N1 (MC) | N2 (MC) | N1 (Data) | N2 (Data) |
|---|---|---|---|---|
| 281/pb | 40724 | 41510 | 25726 | 26204 |
| 537/pb | 81525 | 82518 | 49545 | 49468 |
| 818/pb | 122248 | 124031 | 75252 | 75656 |
| (281/pb+537/pb)-818/pb | 1 | -3 | 19 | 16 |
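The last row of the table is a simple additivity check of the two sub-samples against the full sample, which can be reproduced from the quoted yields:

```python
# Yields quoted in the table: (N1 MC, N2 MC, N1 Data, N2 Data).
counts = {
    "281/pb": (40724, 41510, 25726, 26204),
    "537/pb": (81525, 82518, 49545, 49468),
    "818/pb": (122248, 124031, 75252, 75656),
}

diff = tuple(
    a + b - c
    for a, b, c in zip(counts["281/pb"], counts["537/pb"], counts["818/pb"])
)
print(diff)   # (1, -3, 19, 16)
```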

-

Talk for next week (response to Werner)

Update on the 818/pb D-Hadronic analysis

- Todo:

- Check the crossfeeds with Peter, send him the file link for fitting

- Read the output for the BF fitter

- Check the background file not using the absolute number

- Make the slides by next week

2010-04-20

-

Updating the yield_and_efficiencies file.

- Fixed the plotting error by using the RooFit C++ interface, instead of PyROOT.

- Also works on the default CLEO ROOT(4.03) and previous RooFit(1.92)

-

Fitting plots

| Label | Diagonal | Non-Diagonal |
|---|---|---|
| Peter's | figure | figure |
| My Version with ROOT4.03 | figure | figure |

Notice some differences in the Non-Diagonal fitting, while the Diagonal fits are very similar (the last two columns).

| Mode | Peter's | My Version | Parameter fit (Peter) | Parameter (my) |
|---|---|---|---|---|
| D0B_to_Kpipi0 fakes Dm_to_Kspipi0 | fig | fig | fig | fig |
| Dm_to_Kpipi fakes D0B_to_Kpipi0 | fig | fig | fig | fig |

Why such a difference? => See CBX p19 (PDF)

-

Fit the Branching Fractions:

- The original one (7.06, PRD 2007): => table

-

Branching Fraction: Using Peter's yields_and_efficiencies file => table

-

281ipbv0.1 : Using Peter's crossfeed evt file as input, fit with ROOT4.03, RooFitv1.92 => table

- Compare with PRD 2007 (281ipbv0) => table

-

281ipbv0.2 : Using Peter's crossfeed evt file as input, fit with ROOT5.26, RooFitv3.12 => table

- Compare with 281ipbv0.1 => table

- Process on the 537ipb => done with the mBC fit, see diagram.

2010-04-13

-

Updating the yield_and_efficiencies file.

- Fixed previous crash by installing ROOT5.26 and Python2.6

- Using the same input from Peter and can reproduce the fits

Peter's plot for one mode:

My plot for the same mode:

-

The only difference is that the background cannot be plotted, as stated in the message after fitting:

[#0] ERROR:Plotting -- RooAbsPdf::plotOn(sumpdf_float) ERROR: component selection set = does not match any components of p.d.f.

- Next: Updating the yields and efficiencies and update the BFs.

2010-04-06

-

Run BF fitter on 281/pb - now can reproduce the previous results

-

Found the difference:

I used different generated numbers for several modes in Double Tags:

| Modes | Original | New |
|---|---|---|
| Double_D0_to_Kpipi0__D0B_to_Kpipi0 | 3674 | 2000 |
| Double_D0_to_Kpipi0__D0B_to_Kpipipi | 2023 | 2000 |
| Double_D0_to_Kpi__D0B_to_Kpi | 2000 | 2000 |
| Double_D0_to_Kpipipi__D0B_to_Kpipi0 | 2023 | 2000 |

Also, there was a slight change in my script: one argument's default value used to be 100 but was changed to 1. When setting it to 100 explicitly, the difference went away.

-

Updating the yield_and_efficiencies file.

Tried to use Peter's backgrounds evt file and do the fit as shown in his script: (link)

/nfs/cor/user/ponyisi/hadD/summerconf/stupidcrossfeedplot.py

But the fitting aborted, see the log file.

2010-03-23

-

Processed the 281/pb Data and MC with new momentum resolution parameters and the new DDalitz structure for Kspipi0

- Fit diagonal double tags floating momentum resolution parameters => figure

- Fit signal MC => figure

- Fit data => figure

- In progress : 537/pb Data and MC

-

Todo:

- Get the 281/pb Branching Fractions with the original systematics

- Get the 537/pb Branching Fractions with the systematics

2010-03-16

-

Process the Data and MC in new env

- New Lineshape parameters (Mass, Width, R)

-

DSkim version 2

-

Question:

-

What does "FAILED" mean in the MINUIT process? Does it matter?

A "FAILED" example log file, compared with a "CONVERGED" example log file. - Not a big problem.

- For a meaningful comparison of the 281/pb results between the original and new environment, shall we use the same momentum resolution parameters (fa,sa,fb,sb …) determined by fitting the diagonal double tag MC, or should we generate the double tag MC in the new environment and do a complete new process? - Yes.

- As Dskimv2 has looser cuts on π0 and KS, should we tighten them up to match the original Dskimv1 results? - Need to understand the process.

2010-03-09

-

Process the 537/pb data in new environment (20080228_FULL)

It includes the newly compiled HadronicDNTupleProc and RooFitModels

-

Compare the yields for data 537ipb between original and new => table

Message from Anders:

I see that there is a change in the D yields. I wanted to confirm that there is also a change in the overall number of entries in the plot you are fitting. (I would guess that this is the case as very clean modes like Kpi seems to be almost as strongly affected more 'dirty modes.) If it is the case that you clearly have less entries in the histogram I wonder if you have added code to protect against large events etc and you are now throwing away some number of events.

-

Compare the input evtfile for mBC fit for data 537ipb between two releases

Mode  New Events
0     0
1     444
3     591
200   37
201   836
202   18
203   2743
204   6493
205   19

The new events indicate that the HadronicDNtupleProc code does differ between the two releases.

-

Noticed that the fit failed when using the new parameters to fit the original data.

       7.06/537ipb  537ipb
Mass   3.7718 GeV   3.7724 GeV
Width  0.0286 GeV   0.0252 GeV
R      12.3         12.7

Use the same evtfile as input and do the fit using the original parameters:

=> table

- Question: which parameter shall we use?
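The per-mode count of new events in the evtfile comparison above can be sketched as a set difference. This is only an illustration: the evtfile format is not shown in these notes, so events are represented here as hypothetical `(mode, run, event)` tuples, and the toy data is invented.

```python
from collections import Counter


def new_events_per_mode(old_events, new_events):
    """Count events present in the new release but absent from the old one.

    Each event is a (mode, run, event) tuple; this layout is an assumption.
    """
    old_keys = set(old_events)
    only_new = set(new_events) - old_keys
    return dict(Counter(mode for mode, _run, _event in only_new))


# Toy input, not real data.
old = [(1, 100, 1), (1, 100, 2), (203, 101, 5)]
new = [(1, 100, 1), (1, 100, 2), (1, 100, 3),
       (203, 101, 5), (203, 101, 6), (203, 102, 7)]

counts = new_events_per_mode(old, new)
for mode in sorted(counts):
    print(mode, counts[mode])
```

Swapping the two arguments gives the events that were lost rather than gained, which is the direction Anders asked about.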

2010-02-02

- Turn off the dskim in the DNtuple process for signal MC - Label "537ipbv2"

-

Run 281/pb data with eventstore 20070822 (DSkim v2)

- Fixed the job aborting problem by commenting out the "missing mass calculation"

-

Todo:

- [] Process the data 281/pb and 537/pb

- [] Process 281/pb MC in Dskim v2

2010-01-26

-

Turn off the dskim in the DNtuple process for signal MC

- Script: dskim.tcl in DSkim release 20060224_FULL_A_3

-

Error in dtuple.txt:

Suez.loadHadronicDNtupleProc> go
%% INFO-JobControl.SourceManager: Using myChain for active streams: beginrun endrun event startrun
%% INFO-JobControl.SourceManager: Defined active streams.
ERROR: Suez caught a DAException: "Starting from HadronicDNtupleProc we called extract for [1] type "FATable<NavPi0ToGG>" usage "TagDPi0" production "" <== exception occured

-

Run 281/pb data with eventstore 20070822 (version 2 Dskim)

-

Debug NTuple code for "unknown daughter mode" when processing Data

From HadronicDNtupleProc.cc:

int nDau = tagDecay.numberChildren();
const STL_VECTOR(dchain::ReferenceHolder<CDCandidate>) & vect = tagDecay.children();
assert(nDau <= 5);
for (int dau = 0; dau < nDau; dau++) {
  const CDCandidate& cddau = *(vect[dau]);
  int index = -1;
  if (cddau.builtFromCDKs()) {
    index = indexInKsBlock(cddau.navKshort());
    assert(index >= 0);
  } else if (cddau.builtFromCDPi0()) {
    index = indexInPi0Block(cddau.navPi0());
    assert(index >= 0);
  } else if (cddau.builtFromTrack()) {
    index = indexInTrackBlock(cddau.track());
    assert(index >= 0);
  } else if (cddau.builtFromCDEta()) {
    index = indexInEtaBlock(cddau.navEta());
    assert(index >= 0);
    report( DEBUG, kFacilityString )
      << "While finding D daughters: Eta daughters trying!" << endl;
  } else {
    report( NOTICE, kFacilityString )
      << "While finding D daughters: unknown daughter mode" << endl;
  }

-

Todo:

-

[X] Turn off the dskim in the DNtuple process by removing the ISTVAN_MC_FIX

- [] Debug NTuple code for "unknown daughter mode" - comment out the Ds modes

- [] Investigate π0 and KS cuts

- [] Understand the luminosity calculation

2010-01-21

-

Last week:

- Compare the 537ipb yield with 281ipb in signal MC => table

- Generate signal MC for 537ipb with new run numbers and process with DSkim after Pass2 - label: "537ipbv2"

- D mass comparison table for 281/pb data original and default => table

- Run 281/pb data with eventstore 20070822 (DSkim v2)

-

Todo:

- [X] Turn off the dskim in the DNtuple process

- [X] Fix the EBeam table in the "Grand Comparison Table" and send to DHad HN

- [X] Restore the ISR plots to explain the lower D mass fitting value

- [] Debug NTuple code for "unknown daughter mode"

- [X] Investigate π0 and KS cuts

- [] Understand the luminosity calculation

2010-01-14

- Compare the EVT.PDL file

- Discussion of the fitting plots of data, original, and default

- Fitting 537ipb signal MC => plots

- Compare the 537ipb yield with default 281ipb in signal MC => table

- Compare the ratios of 537ipb/281ipb in Data and MC => table

-

Todo:

- [X] Make the D mass comparison table

- [X] Select runnumber based on luminosity

- [X] Check DTag version for Data and MC

2009

2009-12-17

-

Trace the source of the MC difference

-

Generate more (3X) events in mode D+ → K0S pi+ pi+ pi- -> Link

Processing the CLEOG&PDL&DEC…

- Process new (537/pb) data sample

2009-12-10

-

Trace the source of the MC difference

-

For the EvtGenBase package: "I don't think that any these changes would affect anything you are doing" – Anders

-

For the EvtGenModels package: Possible relevant changes (Full ChangeLog):

2006-01-10 16:35 ryd
  * Class/EvtPHOTOS.cc: Addes constructor to EvtPHOTOS so we can controll what photons are called that are generated by PHOTOS.
2006-01-11 15:58 ryd
  * Class/EvtVPHOtoVISR.cc: Updated the lineshape code for the psi(3770)
2006-01-15 22:20 ryd
  * Class/: EvtKKLambdaCFF.cc, EvtModelReg.cc: Updated model registry.
2006-01-25 14:14 ryd
  * Class/: EvtModelReg.cc, EvtSLBKPole.cc, EvtSLBKPoleFF.cc: New model for 'BK' pole form factors
2006-12-13 10:59 ponyisi
  * Class/EvtModelReg.cc, Class/EvtVPHOtoVISRHi.cc, EvtGenModels/EvtVPHOtoVISRHi.hh: Add Brian Lang's code for vpho -> psi(xxxx) gamma ISR process
2006-12-14 12:22 ponyisi
  * Class/EvtVPHOtoVISRHi.cc: Revert VPHOtoVISRHi to MC-determined probMax
2007-02-26 11:24 ponyisi
  * Class/EvtDDalitz.cc: Flip sign of rho amplitude for D+ -> K0 pi+ pi0
2007-03-26 23:32 pcs
  * Class/EvtISGW2.cc: setProbMax() for updated D and Ds semileptonic decays
2007-04-02 23:28 ponyisi
  * Class/EvtVPHOtoVISRHi.cc: Fix infrequent crash in certain decay modes
2007-10-17 10:10 ponyisi
  * Class/EvtVPHOtoVISRHi.cc: Assert if daughters in VPHOTOVISRHI are listed in an order that the code doesn't treat properly
2007-10-18 22:50 ryd
  * Class/EvtPHOTOS.cc: Store 4-vectors pre-Photos.
2008-01-09 12:34 ponyisi
  * Class/EvtDDalitz.cc: Add Qing He's implementation of Belle's D0 -> anti-K0 pi+ pi- Dalitz model

Recall that one of the largest discrepancies is:

D+ to K0S pi+ pi+ pi-

Maybe in the Dalitz structure? … No.

-

Todo:

-

Generate more events in mode D+ => K0S pi+ pi+ pi-

Also mode D- -> KKpi

-

Generate double tag sample D+ => K0S pi+ pi+ pi- and D0 -> Kpi

Test whether the generic side has something to do with the three body decay.

-

MC efficiency table

Proceed with the mechanics for producing the relevant sample (3X more) and the comparison with 281/pb.

- Make NTuple for Generic MC in the new release

- Use square root scale plots

-

Compare table with 537/pb data including errors

- Derive the error equations

- Show David the equations and the code

2009-12-03

- Trace the source of the MC difference

-

Process full data sample

- Finished single tag fits

- Updating the fitting plots page …

2009-11-19

- Trace the source of the MC difference

-

Process full data sample

- Finished processing the ntuple for the full data sample (skipped some trouble events)

- Get the yields for just the new data … in progress

- Investigate the time consumed by the trouble events … in progress

- Todo: Add the 18 channels and do the fit

2009-11-12

-

Trace the source of the MC difference

-

News about the CLEOC constants Beam Energy Shift

Todo: Need to use the PostPass2 module in the new release … in progress.

-

Process full data sample

-

Avoid the memory leak in the code HadronicDNtupleProc.cc

// *missingMassObjects.loosePi0List = TurnPhdPi0sIntoNavPi0ToGGs(loosePi0table, showtable);

Still wasting time on this event so far …

Run     Event  Time (old)  New
221242  6470   1:30        1:30
221705  29678  3:10        3:33

Todo: run the code on the rest of the trouble events in data44 and data46

2009-11-05

-

Progress and Issues

-

Trace the source of the MC difference

- Add chi-square to the grand comparison table link

-

Virtual photon energy

       Original  New Release  Old CLEOG Const  Four-vector
Mean   3.77330   3.77405      3.77405          3.77334
Sigma  2.15515   2.18113      2.17982          2.15241

From this table, we can see that using the four-vector from the old EvtGen does reproduce the same energy as the original.

- BeamEnergyProxy debug (working on it)
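As a quick check of the virtual photon energy comparison above, the sketch below computes each configuration's shift relative to the original and picks the one closest in mean. The numbers are copied from the table; the units are not stated in the notes, so none are assumed here.

```python
# (mean, sigma) of the virtual photon energy for each configuration.
columns = {
    "Original": (3.77330, 2.15515),
    "New Release": (3.77405, 2.18113),
    "Old CLEOG Const": (3.77405, 2.17982),
    "Four-vector": (3.77334, 2.15241),
}

ref_mean, ref_sigma = columns["Original"]

# Shift of each configuration with respect to the original.
shifts = {
    name: (mean - ref_mean, sigma - ref_sigma)
    for name, (mean, sigma) in columns.items()
    if name != "Original"
}

# Configuration whose mean is closest to the original.
closest = min(shifts, key=lambda name: abs(shifts[name][0]))

for name, (d_mean, d_sigma) in shifts.items():
    print(f"{name:16s} d_mean = {d_mean:+.5f}  d_sigma = {d_sigma:+.5f}")
print("closest in mean:", closest)
```

The old CLEOG constants alone leave the mean unchanged with respect to the new release, which is consistent with the constants not being the source of the shift.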

-

Data43 trouble events

Skip events > 15 min…

Run     Event  Time
221242  6470   1:30
221295  19061  1:00
221493  61358  1:30
221705  29678  3:10
221715  65978  0:23
221963  7013   0:17
221988  23888  0:22
222131  8240   1:00
222279  65673  1:36
222544  42858  0:38
223051  28048  0:17

Job finished OK.

Job 1579825 (dtuple-data43.sh) Complete
User = xs32
Queue = all.q@lnx187.lns.cornell.edu
Host = lnx187.lns.cornell.edu
Start Time = 11/04/2009 09:55:51
End Time = 11/05/2009 05:48:58
User Time = 16:01:55
System Time = 00:15:03
Wallclock Time = 19:53:07
CPU = 16:16:58
Max vmem = 1.848G
Exit Status = 0

Output root files:

-rw-r--r-- 1 xs32 cms 941M Nov 5 05:48 data43_dskim_evtstore_1.root
-rw-r--r-- 1 xs32 cms 1.8G Nov 4 23:49 data43_dskim_evtstore.root
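The timing fields in the job report above can be cross-checked with a few lines of Python. This is only a consistency sketch using the values quoted in the report; the helper name `hms` is introduced here for illustration.

```python
from datetime import datetime, timedelta


def hms(text):
    """Parse an 'HH:MM:SS' string into a timedelta."""
    hours, minutes, seconds = (int(part) for part in text.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


FMT = "%m/%d/%Y %H:%M:%S"
start = datetime.strptime("11/04/2009 09:55:51", FMT)
end = datetime.strptime("11/05/2009 05:48:58", FMT)

wallclock = end - start                   # should match "Wallclock Time"
cpu = hms("16:01:55") + hms("00:15:03")   # user + system, should match "CPU"
cpu_fraction = cpu / wallclock            # share of wallclock spent on CPU

print("wallclock:", wallclock)
print("cpu:      ", cpu)
print(f"cpu / wallclock = {cpu_fraction:.2f}")
```

Both derived values agree with the report, so the job was CPU-bound for most of its run rather than waiting on I/O.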

-

Similar trouble in data44 and data46

The possible solutions are :

- Break the job into two

- Skip the bad events (manual or with tools)

2009-10-29

-

Progress and Issues

-

Trace the source of the MC difference

- Check the EvtGen in the old release with DEBUG info

They use the same: DBBeamEnergyShift using version 1.11

-

Trace the BeamEnergyProd

Look up source code MCBeamParametersProxy.cc:

// -------------- beam energy and energy spread ----------------
// mean energy for each beam

FAItem< BeamEnergy > iBeamEnergy;
extract( iRecord.frame().record(Stream::kStartRun), iBeamEnergy );

const double beamEnergy ( iBeamEnergy->value() ) ;

Message from Brian:

I think BeamEnergy comes from https://www.lepp.cornell.edu/restricted/webtools/cleo3/source/Offline/src/CesrBeamEnergyProd/Class/BeamEnergyProxy.cc#155 This extracts CesrBeamEnergy from the startrun record https://www.lepp.cornell.edu/restricted/webtools/cleo3/source/Offline/src/CesrBeamEnergyProd/Class/BeamEnergyProxy.cc#136 and then if the flag is selected, corrects it here: https://www.lepp.cornell.edu/restricted/webtools/cleo3/source/Offline/src/CesrBeamEnergyProd/Class/BeamEnergyProxy.cc#142 All this is in the producer CesrBeamEnergyProd.

The startrun record is more of a concept than a place. It comes after a begin run but before the first event, but doesn't have to be located in the event file (although it usually is). information "in" a record is not necessarily, well, "in" it. There can be other sources of information in the startrun record, and in this case CesrBeamEnergy comes from RunStatistics, the constants db that is written online, which I am pretty sure is a different db than the one we generally use offline. CerBeamEnergy is meant to be the value that CESR gave us based on their magnet settings.

-

Data43 trouble events

-

Disable the missing mass calculation

Commented out lines in HadronicDNtupleProc.cc:

// CDPi0List pi0List;
// missingMassObjects.pi0List = new CDPi0List();
// *missingMassObjects.pi0List=pi0table;

Got "segmentation violation" error => data43.txt

2009-10-22

-

Progress and Issues

-

Trace the source of the MC difference

-

Set DEBUG level in Suez (Suggestion from DLK)

Tested 10 events and compared the log files: old constants vs. new constants

%% DEBUG-ConstantsPhase2Delivery.CP2SourceBinder: fdbName /nfs/cleo3/constants/db/Codi Tag PASS2-C_5
%% DEBUG-ConstantsPhase2Delivery.CP2SourceBinder: fdbName /nfs/cleo3/constants/db/Codi Tag PASS2-C_6

They look different. But why does the Beam Energy still not change?

- Check the EvtGen Code?

-

Investigate the trouble events in data43

-

Getting the Bad Event List

if ( tuple.run == 221242 && tuple.event == 4599 ||
     tuple.run == 221242 && tuple.event == 6470 ||
     tuple.run == 221295 && tuple.event == 19061 ||
     tuple.run == 221384 && tuple.event == 17807 ||
     tuple.run == 221413 && tuple.event == 54964 ||
     tuple.run == 221438 && tuple.event == 37151 ||
     tuple.run == 221477 && tuple.event == 57536 ||
     tuple.run == 221492 && tuple.event == 62595 ||
     tuple.run == 221493 && tuple.event == 47146 ||
     tuple.run == 221493 && tuple.event == 47852 ||
     tuple.run == 221493 && tuple.event == 60003 ||
     tuple.run == 221493 && tuple.event == 60017 ||
     tuple.run == 221493 && tuple.event == 60067 ||
     tuple.run == 221493 && tuple.event == 60096 ||
     tuple.run == 221493 && tuple.event == 60102 ||
     tuple.run == 221493 && tuple.event == 60198 ||
     tuple.run == 221493 && tuple.event == 60221 ||
     tuple.run == 221493 && tuple.event == 60282 ||
     tuple.run == 221493 && tuple.event == 60301 ||
     tuple.run == 221493 && tuple.event == 60465 ||
     tuple.run == 221493 && tuple.event == 60479 ||
     tuple.run == 221493 && tuple.event == 60628 ||
     tuple.run == 221493 && tuple.event == 60649 ||
     tuple.run == 221493 && tuple.event == 60653 ||
     tuple.run == 221493 && ( tuple.event <= 60653 || tuple.event >= 75516) ||
     tuple.run == 221311 && tuple.event == 22714 ) {
  report( NOTICE, kFacilityString )
    << "Bypassing ... Run: " << tuple.run << " Event: " << tuple.event << endl;
  return ActionBase::kFailed;
}

Trouble run: 221493. Events that make the job abort:

>> Tue Oct 20 16:04:05 2009 Run: 221493 Event: 60705 Stop: event <<
%% NOTICE-ConstantsPhase2Delivery.DBCP2Proxy: DBEIDPDFBinning using version 1.1
%% NOTICE-ConstantsPhase2Delivery.DBCP2Proxy: DBEIDPDFFunction using version 1.2
%% NOTICE-ConstantsPhase2Delivery.DBCP2Proxy: DBEIDWeightingFactor using version 1.1
%% INFO-C3ccProd.CcShowerAttributesProxy: Running with CC run-to-run corrections
%% INFO-C3ccProd.CcShowerAttributesProxy: Running with CC hot list suppression available
%% INFO-CCGECS: CC Energy-dependent calibration version of Jan 2004

Need to use the SkipBadEvent package to avoid compiling every time.
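The idea of keeping the bad event list outside the compiled code can be sketched as follows. This is not the SkipBadEvent package interface, which is not shown in these notes; the text format and the function names `parse_bad_events` and `should_skip` are hypothetical stand-ins. Only the first three (run, event) pairs from the list above are used.

```python
def parse_bad_events(text):
    """Parse 'run event' pairs, one per line; '#' starts a comment."""
    bad = set()
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        run, event = line.split()
        bad.add((int(run), int(event)))
    return bad


def should_skip(run, event, bad_events):
    """Return True if this (run, event) pair is on the bad event list."""
    return (run, event) in bad_events


# In practice this text would live in a file that can be edited
# without recompiling; it is inlined here to keep the sketch runnable.
BAD_EVENT_TEXT = """
# run    event
221242   4599
221242   6470
221295   19061
"""

bad_events = parse_bad_events(BAD_EVENT_TEXT)
print(should_skip(221242, 4599, bad_events))   # listed event
print(should_skip(221242, 4600, bad_events))   # neighbouring event, not listed
```

A set lookup also avoids the long chain of comparisons, and an event range such as the one used for run 221493 could be handled with a second list of (run, first, last) entries.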

-

Plans

-

CLEOG constants

- Check the EvtGen in the old release with DEBUG info

- Trace the MCProxyBeamEnergyProd on how to produce

-

Data43 trouble events

- Disable the missing mass calculation

- Use SkipBadEvent package (if there are still bad events)

2009-10-15

-

Progress and Issues

-

Trace the source of the MC difference

-

CLEOG constants still using the old version

Posting on HyperNews => link

Is there any other way to test the old constants besides the cleog log file?

- Any Change in the EvtGen code?

- Investigate the trouble events in data43

-

Event Display for Run: 221242 Event: 4599

(Dan helped me to stage the raw info of this run from the Tape.)

In the log file:

>> Tue Oct 13 10:46:43 2009 Run: 221242 Event: 4599 Stop: event <<
%% NOTICE-Processor.HadronicDNtupleProc: Hard limit on number of DD candidates exceeded

-

Modify code to bypass this event

In HadronicDNtupleProc.cc:

if( tuple.nddcand >= MAXNDDCAND ) {
  report( NOTICE, kFacilityString )
    << "Hard limit on number of DD candidates exceeded" << endl;
  return ActionBase::kFailed; // added by xs32
}

This only reduced the CPU time by 7 minutes.

Test    Job start             Job end               Duration
First   Oct 13 10:46:43 2009  Oct 13 11:10:34 2009  23' 51"
Second  Oct 14 08:12:04 2009  Oct 14 08:28:43 2009  16' 39"

-

What is the right way to bypass?

Comment from Jim and David: The duration is actually the time spent on that event before reaching the maximum DD candidate limit, so it is not a measure of the effectiveness of this method. Try to skip these events if there are not too many of them.
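The two durations in the test table, and the roughly 7 minutes saved, follow directly from the job start and end timestamps. A small sketch, using only the timestamps quoted above (month names are parsed with `%b`, which assumes an English locale):

```python
from datetime import datetime

FMT = "%b %d %H:%M:%S %Y"


def duration(start, end):
    """Return end - start for two timestamps in the log's format."""
    return datetime.strptime(end, FMT) - datetime.strptime(start, FMT)


first = duration("Oct 13 10:46:43 2009", "Oct 13 11:10:34 2009")
second = duration("Oct 14 08:12:04 2009", "Oct 14 08:28:43 2009")
saved = first - second

print("first: ", first)    # before the early return was added
print("second:", second)   # with the early return
print("saved: ", saved)
```

As the comment from Jim and David points out, this difference measures the time spent on the event before the candidate limit is hit, not how effective the bypass is.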

-

Plan

-

CLEOG constants

- Ask Steve Blusk

- Contact DLK after next Tuesday

-

Data43 trouble events

- Use "Bad Event List" in the code

Date: 2011-01-06 11:38:08 EST